Breaking down Facebook’s (lack of) oversight

The company has an enormous impact on our politics, and their actions remain largely unchecked

Last week, Facebook again endured a wave of negative press, this time for locking out transparency researchers from access to its political ads archive. The company’s current PR crisis - one of too many to count this year - comes as they reported record earnings in Q2. We’ve repeatedly covered how Facebook has an outsized impact on our politics, and these days, it seems like they just do what they want with few repercussions. With little hope for meaningful regulation on the horizon, how can we hold the company accountable?

In this week’s FWIW, we sit down with the Real Facebook Oversight Board’s Kyle Taylor to discuss the company’s recent actions around transparency and their impact on our democracy. But first...

By the numbers

Here are the top 10 political ad spenders on Facebook and Instagram last week:

Topping the list of advertisers last week was PhRMA, which spent over half a million dollars on a handful of ads opposing changes to Medicare Part D. Val Demings also significantly increased her spending, primarily running national grassroots fundraising ads.

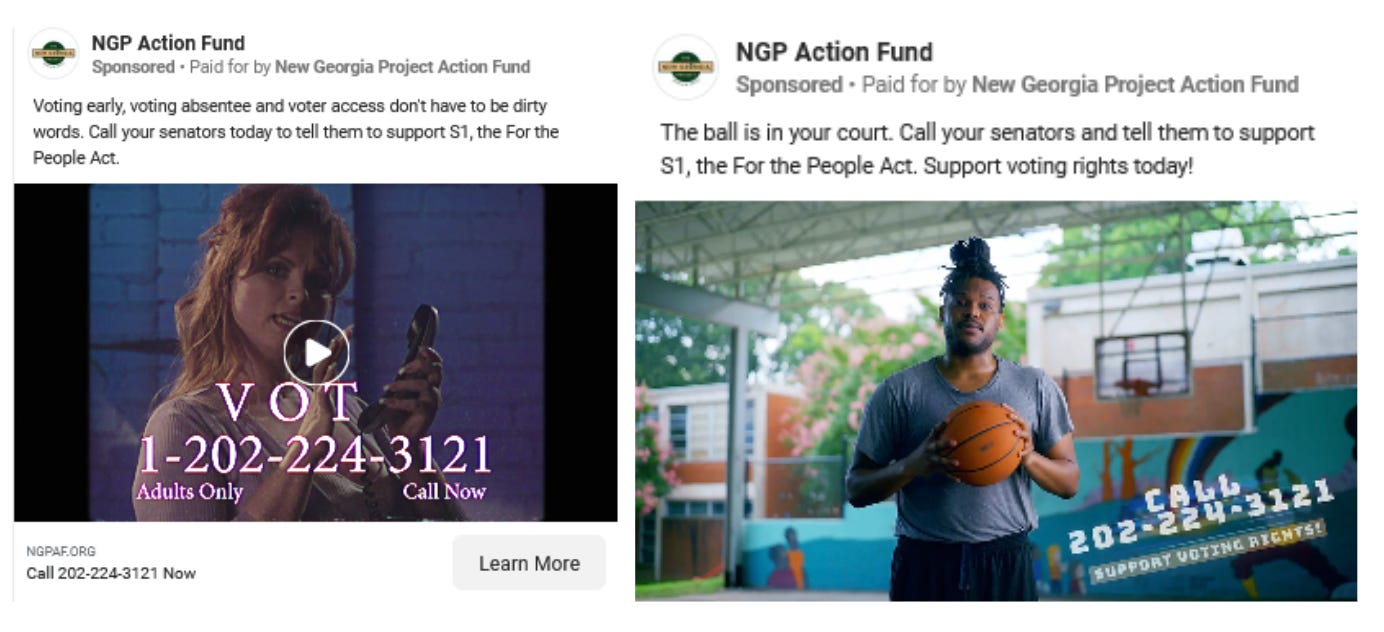

Still fighting the good fight for the “For the People Act” is the New Georgia Project Action Fund, which ran a pair of video ads on Facebook urging young adults to call their senators to tell them to support the bill. The campaign only lasted for a few days, but they spent a whopping $279,862 on it from August 6th to August 10th, and targeted mostly young adults in Arizona, Georgia, Texas, and West Virginia.

On the other side of the aisle, a handful of GOP members of Congress and candidates are using false, fearmongering Facebook ads to fundraise ahead of next year’s elections. The ads falsely tie the Delta COVID surge to the recent increase in immigrants trying to cross the southern border. According to the Washington Post, Facebook removed similar ads last year, claiming “we don’t allow claims that people’s physical safety, health, or survival is threatened by people on the basis of their national origin or immigration status.” Somehow these new ads are okay. Make it make sense, Facebook!

So far, at least seven Republicans are running these types of ads: Sens. John Barrasso and Kevin Cramer, Reps. Ted Budd, August Pfluger, Jake LaTurner, and Greg Murphy, and Nevada congressional candidate April Becker.

Meanwhile, here are the top 10 political ad spenders on Google last week:

GOP gubernatorial candidate Glenn Youngkin rolled out a six-figure Google and YouTube ad campaign last week that tries to have it both ways with law enforcement. While some of the banner ads they’ve started running call for reforming police and parole boards, others claim that “reincarceration saves taxpayer dollars” and “law enforcement needs more resources, not less.” One of their YouTube ads even goes so far as to promise that “qualified immunity will never go away.”

We’ve got more on Youngkin’s focus on law and order messaging in this week’s issue of FWIW VA.

...and FWIW, here’s political ad spending on Snapchat so far this year:

The 2022 midterms will be here before we know it. If you’re one of our awesome readers who loves getting in the weeds on digital political tactics and wants to get involved, consider applying for the next Arena Academy by this Sunday, August 15th! You’ll get hands-on campaign experience that’ll prepare you for the real deal next year. Interested? Check out the Arena website to apply and learn more.

Breaking down Facebook’s (lack of) oversight

Facebook’s business may be booming, but the social media giant has been under constant attack for its failures to appropriately manage harmful and misleading content on its platform. To recap, just over the past few months:

Our research showed the most engaged vaccine posts on Facebook were negative or skeptical;

Facebook allowed Donald Trump to resume advertising on their platform, despite claims to the contrary;

A new report showed that Facebook’s political ad ban in the final stretch of the 2020 election hurt Democrats and did little to curb misinformation;

The President of the United States said the company was killing people;

Facebook had discussions about restricting access to its CrowdTangle tool because the metrics looked bad for the company;

Facebook locked out transparency researchers from using its transparency tools.

With little government regulation holding the company accountable, there is a growing list of organizations and individuals who have been monitoring Facebook’s failures and rallying the public to take action. Groups like Accountable Tech and Decode Democracy are monitoring platform policy failures while educating and organizing people online. Another is the Real Facebook Oversight Board (RFOB), which recently released its “Quarterly Harms Report”, in conjunction with a direct action outside of Facebook’s DC office.

We sat down with RFOB’s Kyle Taylor for a Q&A on the current state of public pressure on the company, its commitment to transparency, and its impact on our politics (this transcript has been edited for clarity and style):

Kyle at FWIW:

Some recent estimates say around 70% of Americans are on Facebook, which means that what happens on their platform can significantly impact our politics and society offline. In terms of the negative impacts - hate speech, misinformation – what responsibility does Facebook bear?

Kyle Taylor, RFOB:

Facebook is a business, and it will take actions based on maximizing profit or primarily pushing its business interests. That’s what makes it not just a marketplace of ideas, because their objective is to sell ad space. They're an ad business. So in terms of what responsibility they have to all of us, I think the question instead needs to be from a societal perspective, what do we need Facebook’s responsibility to be? We’ve had this sort of 20-year period of tech exceptionalism that has said progress for the sake of progress is good. We’ve come to a place where these exchanges between governments or societies and these tech companies are like a negotiation - as opposed to what it should be, which is a democratic society deciding how they want their society to exist online and telling tech companies to meet those requirements.

I would argue that Facebook’s responsibilities should be much greater than those of most other private businesses because they're effectively the digital public sphere. You mention that something like 71% of Americans have a Facebook account; among American adults, it’s closer to 81%. Many of those people are voters, and active user numbers on other platforms like Twitter aren’t even close. Facebook, WhatsApp, Instagram – that effectively is the digital public sphere, and it's entirely run by a private company whose primary interest is profit. We just have to ask ourselves, is that in the best interest of society? We would argue, no. And as such, how do you mitigate it?

Kyle at FWIW:

Until 2016, even many Americans who pay attention to politics didn't recognize the enormous impact that Facebook had on our democracy. 2016 was kind of like a turning point where people started to realize that, hey, certain really bad things are happening here, and maybe we need some changes. So when it comes to our society telling Facebook what we need in terms of moderation or transparency, we're still pretty early on in a sort of education phase. Do you think Facebook even recognizes its impact in that regard? One thing we saw last year during the election cycle is that the company would make numerous last-minute changes to things like their political advertising policies that could tip the scales in favor of one side or another. And the company seemed completely unaware of the downstream impacts of their changes.

Kyle Taylor, RFOB:

I think that Facebook absolutely knows the power that they have, but I am unsure of whether they have a clear agenda about what they're doing with that power. I would say the primary thing that they're trying to do in the context of elections is to avoid regulation. It doesn’t necessarily mean Democratic control or Republican control of government. In fact, it usually means split control – because divided government typically prevents any type of regulatory action from taking place. There is nothing the government can do really to force Facebook to change its policies at this very moment.

On the 2016 question really quickly - I think it's a really important thing because this doesn't happen in isolation. In 2016, we saw the emergence for the first time in a generation of a candidate that was in no way attached to reality, and democratic society typically depends on a shared reality in order to function.

If you don’t care about telling the truth, and there is this amazing tool that lets you say anything you want to people directly with no recourse, then it's a perfect storm, right?

What we saw is that so much of our society is built on norms and not laws. And so I'm glad that after 2016, everybody's now woken up because action is required immediately to make sure these things don’t happen again. I believe the first step would be transparency. Our current situation in terms of transparency on Facebook is that Facebook provides us with data that we're supposed to just sort of accept as true. “Oh, we took down 2 billion fake accounts.” Well, how many didn't you take down? We’re supposed to just take their word for it.

Kyle at FWIW:

We obviously use their transparency data for our newsletter every week, whether it's for advertising spend or organic post engagement. There was recently a big story in the New York Times about how Facebook execs have discussed how to restrict access to transparency tools like CrowdTangle because the data made them look bad. Then, last week, researchers from NYU were locked out of their transparency library entirely, due to a dispute over how they were using the tool. What does that say about the company’s stated commitment to transparency?

Kyle Taylor, RFOB:

I think there are a few things that are really significant. The first is that the main transparency tools of Facebook are owned by Facebook, right? So a very easy, quick fix would be antitrust action that says something like CrowdTangle needs to be its own company. Also, implementing policies that would prevent the company from restricting access to platform data. You know, something that a regulator would do in a European country that you hope you can get to in the American context.

The second thing I find interesting with the NYU Ad Observatory piece last week is the timing. It’s been reported that Facebook restricted the NYU Ad Observatory’s access within a few hours of them notifying Facebook that they were going to be looking into disinformation around the January 6th insurrection. This was timed alongside the Congressional select committee also looking into the January 6th insurrection. That timing is just too convenient for me to see it as a coincidence.

All of this says to me that Facebook has something to hide and they also know that there is no way to force this data out of their platform at the moment – not the FTC, not Congress. For transparency, we’re at the mercy of these corporate dictators, right? It’s like “sure, use CrowdTangle to see what we will allow you to see until it's no longer convenient for us.”

Kyle at FWIW:

In terms of revenue growth, Facebook just had one of its best financial quarters ever. At the same time, they’ve had a really terrible, terrible year in terms of PR - just taking it on the chin with negative news story after news story. I totally understand what they’re doing re: transparency, because so much of their bad press is caused by their own transparency data that they put out there.

They put out a press release or pitch an Axios story saying they're going to do things like crack down on hate speech or COVID misinformation. And then a couple of us just take a cursory glance - not even really deep dives - into their ad library or into CrowdTangle, and we see that that kind of information is alive and well. So, from their own self-preservation perspective, it makes sense that they would start to curtail access to that data a bit, because it continuously damages the company’s reputation.

We've also found that, particularly in the American political space, the only way that we've been able to see change with Facebook is through PR campaigns and public pressure from major political actors. That’s why we see Facebook spending all of this money trying to work the refs. They're sponsoring pretty much every newsletter sent out by Politico or Axios. They’ve staffed up their lobbying shop enormously. What’s their end game there, and what kind of pressure works against the company?

Kyle Taylor, RFOB:

I believe 2020 was the first year that big tech spending generally exceeded that of every other industry in Brussels and DC. They’re one of the largest interest groups in DC. In our report last quarter, we noted the number of people in public policy that they were currently hiring is over 1,300 globally. In the US, it's literally like one public policy manager per member of Congress. Of course, their agenda is to avoid undesirable regulation, because like I mentioned before, they're an ad business. Their customers are advertisers, we’re their product, and their only objective is to maximize profits as a private company.

So as you say, they're returning record quarterly profits. That speaks volumes about how the bad PR against the company - from the organizing of an insurrection on their platform, to the facilitating of a genocide against the Rohingya, and, you know, them calling Kenosha an “operational mistake” - does not actually have any impact on their ability to do business.

Pressure on Facebook, business-level pressure, is so difficult. They respond to bad PR with just spin upon spin for a media moment. In the US, I think they're looking at the political landscape and thinking regulation is fairly unlikely in the near term, so they’re just treading water.

If they can just keep this spin going, they’ll make it through the next media cycle or hurdle. That’s why it feels like real change needs to come from oversight, transparency and accountability that’s forced upon them.

We have to remember as well, they have an addictive product that is a monopoly. Something like 89% of all social media interactions happen on Facebook. And we know the design of the platform is built to keep you on it as long as possible. The business interest is to keep you on, and the stuff that keeps you on is often extremist or harmful.

The last thing I'll say is that solving these problems isn’t as complicated as Facebook wants us to believe. Report after report shows that disinformation is consolidated to a limited number of super spreaders. Election misinfo on Twitter declined significantly after they deleted Donald Trump’s account - suddenly it becomes like a functional information space.

Facebook would want you to believe that this is somehow a secret attempt to crush free speech or it’s a problem that just can’t be controlled. They’re wrong.